If you're running a business in Germany, Poland, Spain, or any non-English market, you might be invisible in ChatGPT even when you dominate your home market.

We analyzed over 10 million ChatGPT searches to understand how the AI actually finds its answers. When users ask questions in languages like German, Polish, or Spanish, ChatGPT conducts its own background research to find sources. We found that nearly half of this research happens in English, even when the original question wasn't in English.

In practice, German users asking about German companies get international brands, Polish users searching for Polish services see global platforms instead of local leaders, and so on.

80% of internet users don't speak English as their primary language, and this bias affects all of them. Let me show you what we found in our research (and what you can do about it).

German software companies disappear in German searches

I asked ChatGPT in German about German software companies.

The response listed excellent companies. But none of them were German. Not a single German software house made the list, despite Germany having a thriving tech industry and the query being asked in German, from Germany.

Screenshot: ChatGPT citing English-language sources, particularly Brainhub.eu, for a prompt in German.

And it's not just Germany: we found the same pattern across other non-English markets. A local business can dominate its home market and still be invisible in ChatGPT.

Ignoring Poland’s market leader

Here's an even more striking example. Ask ChatGPT in Polish, using a Polish IP address: "Jakie są najlepsze portale aukcyjne?" (What are the best auction portals?)

Allegro.pl is either completely missing from the response or buried far down the list.

This isn't a small player. Allegro is Poland's dominant e-commerce platform and the local market leader.

Instead, ChatGPT consistently prioritizes eBay and other global platforms.

An example can be seen in the screenshot below:

A Polish user, asking in Polish, from Poland, about auction portals gets recommendations that ignore or downplay their own market leader.

The mystery of Spanish results

Spain is a major European market with almost 48 million people, and Spanish is one of the world's most widely spoken languages. ChatGPT should handle it well and surface Spanish content in its answers, right?

Not quite. I asked in Spanish: "What are recommended clothing brands?"

ChatGPT recommended two Spanish brands: Zara and Mango. Not bad, except that the sources ChatGPT cited were all English-language sites: Kokolete.com, Imagedbyra.com, Wikipedia, and Ninghowaparel.com.

The screenshot below illustrates this:

ChatGPT is recommending brands to Spanish users in Spain based on English-language sources that promote global brands, not sources that are actually relevant to the Spanish market.

The cosmetics example shows this even more clearly. When I asked "What are the best cosmetics brands?" in Spanish, zero Spanish brands appeared.

Why? The screenshot below shows that ChatGPT chose English-language sources for this query:

Using Peec AI to analyze how ChatGPT researched this query, we can see the actual search queries (called fan-outs) that ChatGPT used behind the scenes:

First fan-out (English): "best cosmetic brands skincare makeup top brands."

This naturally surfaces global sources, which favor global brands. That's the first bias.

Second fan-out (Spanish): "Mejores marcas de cosméticos globales alta calidad."

Translation: "Top global high-quality cosmetic brands".

Notice what happened? We asked in Spanish about cosmetics brands, but in this test example ChatGPT added "global" on its own.

When a Spanish user asks in Spanish, from Spain, about cosmetics brands, interpreting that as a request for global brands is a design choice. In this case, it excludes brands that dominate the Spanish market in favor of brands that dominate English-language content.

The bias extends to politics

This pattern isn't limited to commercial searches. When I asked "what are the best political parties" from a Polish IP address, ChatGPT commonly cited Deutsche Welle, a German media outlet that publishes content in both German and English. For this prompt, ChatGPT used the English content as its source.

I tested German political queries from a German IP address and noticed the same pattern: ChatGPT commonly referenced English sources there as well.

Then I tried a French IP address with the query "Quels partis politiques sont les meilleurs" (Which political parties are the best?). Again, ChatGPT predominantly cited English sources, particularly Fiveable.me.

Even for a topic as sensitive as politics, ChatGPT is relying on English-language sources across different countries.

ChatGPT’s hidden research process

To understand why this happens, we need to look at how ChatGPT actually finds its answers.

When you ask ChatGPT a question, it often doesn't just pull from memory. Instead, it breaks your question down into several smaller, more specific queries and searches for sources. These background queries are called query fan-outs.

We analyzed over 20 million of these fan-outs from recent months, covering more than 10 million user prompts. The data shows a consistent pattern: ChatGPT starts its research in the user's language, but then switches to English.

The user asks in German: "Was sind die besten Softwareunternehmen?" (Translation: What are the best software companies?)

ChatGPT's first fan-out query: German

ChatGPT's subsequent fan-out queries: English

The AI starts in your language, then conducts a big part of its actual research in English. ChatGPT commonly mixes English fan-outs with local language fan-outs. This means users in Germany get a mix of German and English results, while users in the UK get only English results.

English content appears in both contexts, but German content only surfaces in Germany. This issue isn't limited to Germany - it affects any country where English isn't the primary language. The mixing itself creates the bias toward English-language content.

How often ChatGPT switches to English

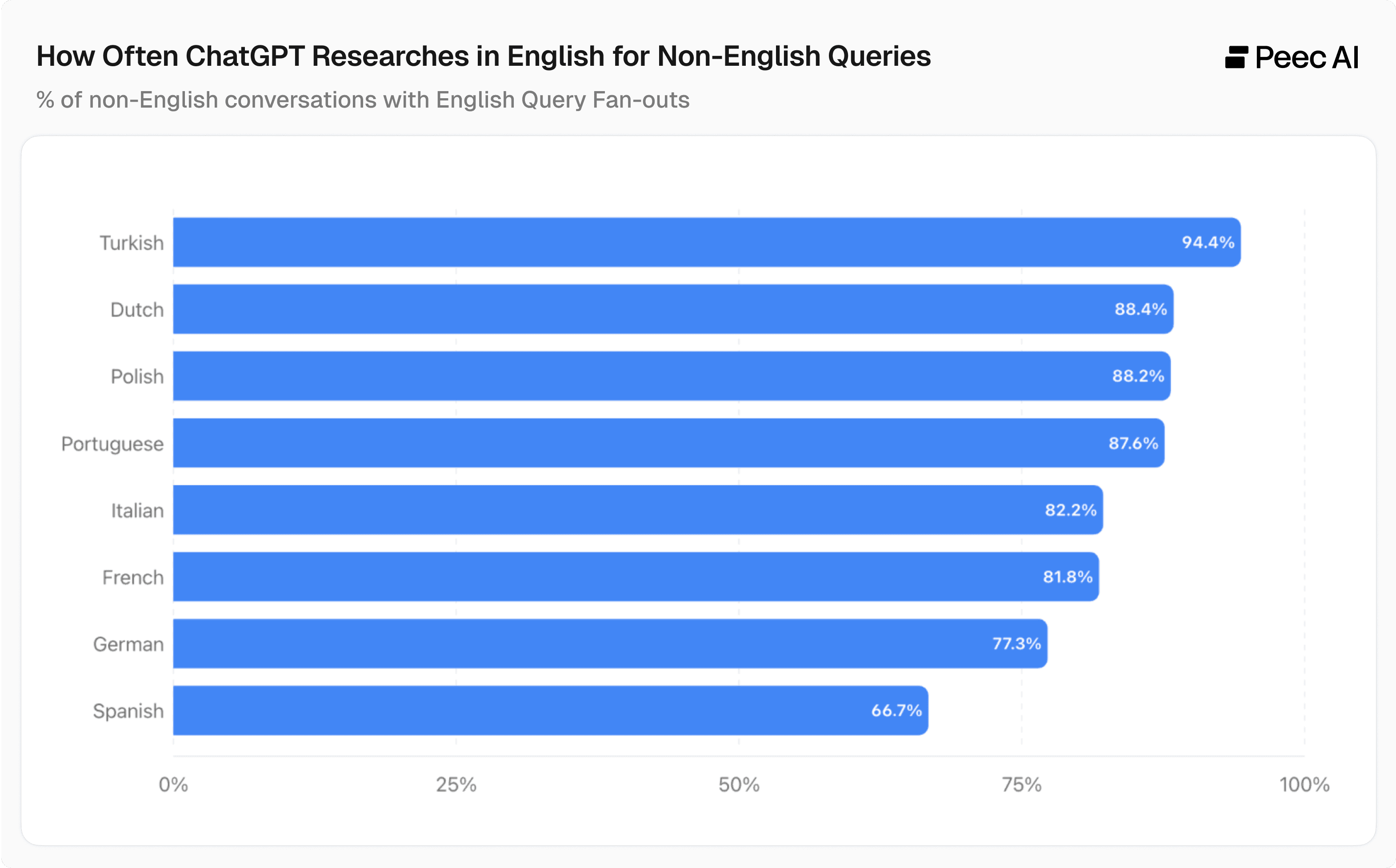

We wanted to make sure this pattern was real, not an artifact of mixed signals.

We filtered the data to include only cases where user location matched query language: Polish queries from Poland, German queries from Germany, Spanish queries from Spain. We excluded any location or language mismatches like Polish queries from the UK or German queries from Spain.

This constraint was crucial. If someone asks in German from London, ChatGPT might reasonably assume they want international results. But a German query from Germany should prioritize German sources.
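A minimal Python sketch of this filtering step follows. It is an illustration, not Peec AI's actual pipeline: the `Prompt` record, its fields, the `EXPECTED_LANGUAGE` mapping, and the sample data are all hypothetical, and the sketch assumes each prompt already carries a detected language code and a country code derived from the user's location.

```python
from dataclasses import dataclass

# Hypothetical mapping from a country code to the language a "local" user
# is expected to write in. Only the markets named above are listed.
EXPECTED_LANGUAGE = {
    "PL": "pl",
    "DE": "de",
    "ES": "es",
}


@dataclass
class Prompt:
    """One user prompt (hypothetical schema for illustration)."""
    prompt_id: str
    country: str          # ISO 3166-1 alpha-2 code of the user's location
    prompt_language: str  # ISO 639-1 code of the prompt's language


def matched_prompts(prompts):
    """Keep only prompts written in the expected language of the user's country.

    Mismatches such as a Polish prompt from the UK or a German prompt from
    Spain are dropped, as are countries missing from the mapping.
    """
    return [
        p for p in prompts
        if EXPECTED_LANGUAGE.get(p.country) == p.prompt_language
    ]


sample = [
    Prompt("a", "PL", "pl"),  # Polish from Poland: kept
    Prompt("b", "GB", "pl"),  # Polish from the UK: dropped
    Prompt("c", "DE", "de"),  # German from Germany: kept
    Prompt("d", "ES", "de"),  # German from Spain: dropped
]

print([p.prompt_id for p in matched_prompts(sample)])  # ['a', 'c']
```

Countries missing from the mapping are dropped rather than guessed, which keeps the filtered sample conservative.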

For this research, we analyzed over 10 million prompts and 20 million query fan-outs from Peec AI data. The results were clear: in most non-English ChatGPT sessions we analyzed, at least one sub-search is performed on the English web.

In nearly 78% of cases, ChatGPT apparently decides that native-language sources alone aren't enough to provide a high-quality answer, so it automatically supplements the query with additional English-language research.

Here's a more detailed breakdown by language. Turkish queries switch to English most often, at 94%, while Spanish is lowest at 66%. Even that means two-thirds of Spanish searches in ChatGPT include English-language research, and no non-English language we analyzed falls below 60%.
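To make the per-language percentages concrete, here is a minimal sketch of how such a rate could be computed. It assumes a hypothetical input format in which each prompt's language code is paired with the detected languages of its fan-outs; the function name and toy data are invented and do not come from the study.

```python
from collections import defaultdict


def english_switch_rate(sessions):
    """Per prompt language, the share of prompts with at least one English fan-out.

    `sessions` is an iterable of (prompt_language, fanout_languages) pairs,
    where fanout_languages lists the detected language of each background
    query (hypothetical format for illustration).
    """
    totals = defaultdict(int)
    switched = defaultdict(int)
    for prompt_language, fanout_languages in sessions:
        if prompt_language == "en":
            continue  # only non-English prompts are of interest here
        totals[prompt_language] += 1
        if "en" in fanout_languages:
            switched[prompt_language] += 1
    return {lang: switched[lang] / totals[lang] for lang in totals}


# Toy data, not the study's data.
toy = [
    ("es", ["es", "en"]),
    ("es", ["es", "es"]),
    ("es", ["es", "en", "en"]),
    ("tr", ["tr", "en"]),
    ("tr", ["en"]),
    ("en", ["en"]),
]

rates = english_switch_rate(toy)
print({lang: round(rate, 2) for lang, rate in rates.items()})  # {'es': 0.67, 'tr': 1.0}
```

A prompt counts as "switched" as soon as one of its fan-outs is in English, regardless of how many other fan-outs stayed in the local language.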

Why does ChatGPT switch to English?

When we look at the actual volume of work ChatGPT does behind the scenes, the bias becomes even clearer. We tracked the individual background research steps - the fan-outs - that ChatGPT performs to build an answer.

Our analysis revealed that 43% of these research steps were conducted on the English-speaking web, even when the original question was asked in another language. This means nearly half of ChatGPT’s research is spent digging through English sources, even for users searching in their native language from their home market.

43% of query fan-outs for non-English prompts are performed in English.
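This per-fan-out figure measures something different from the 78% session-level figure earlier in the article, which is one reason the two numbers differ. A session counts toward the 78% as soon as any one of its fan-outs is in English, while the 43% counts every fan-out individually. The sketch below uses invented toy data and a hypothetical input format (prompt language paired with fan-out languages) to show how the same sessions can yield a high session-level rate and a lower fan-out share.

```python
def any_english_share(sessions):
    """Share of non-English prompts with at least one English fan-out."""
    non_english = [fanouts for lang, fanouts in sessions if lang != "en"]
    if not non_english:
        return 0.0
    return sum(1 for fanouts in non_english if "en" in fanouts) / len(non_english)


def english_fanout_share(sessions):
    """Share of all fan-outs (for non-English prompts) that were in English."""
    total = 0
    english = 0
    for prompt_language, fanout_languages in sessions:
        if prompt_language == "en":
            continue
        total += len(fanout_languages)
        english += sum(1 for lang in fanout_languages if lang == "en")
    return english / total if total else 0.0


# Toy data: four German prompts, each with four fan-outs.
toy = [
    ("de", ["de", "en", "de", "de"]),
    ("de", ["de", "en", "en", "de"]),
    ("de", ["de", "de", "en", "de"]),
    ("de", ["de", "de", "de", "de"]),
]

print(any_english_share(toy))     # 0.75
print(english_fanout_share(toy))  # 0.25
```

Three of the four toy prompts touch the English web, yet only a quarter of the individual fan-outs are in English.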

So why does ChatGPT behave this way?

First: Authority signals favor global content

Global pages typically have more backlinks and more citations, signals that AI models use to determine authority. It's easier for ChatGPT to assess credibility when content carries these markers.

Second: Risk minimization

ChatGPT wants to ensure users get valuable content. Given that roughly 50% of internet content is written in English, querying in English reduces risk: more content means a higher probability of finding quality sources.

What you can do about it

This pattern affects businesses across Latin America (Brazil, Mexico, Argentina), European markets (Germany, France, Spain, Italy, Poland, and others), Asian markets outside English-speaking regions, and even French-speaking Canada.

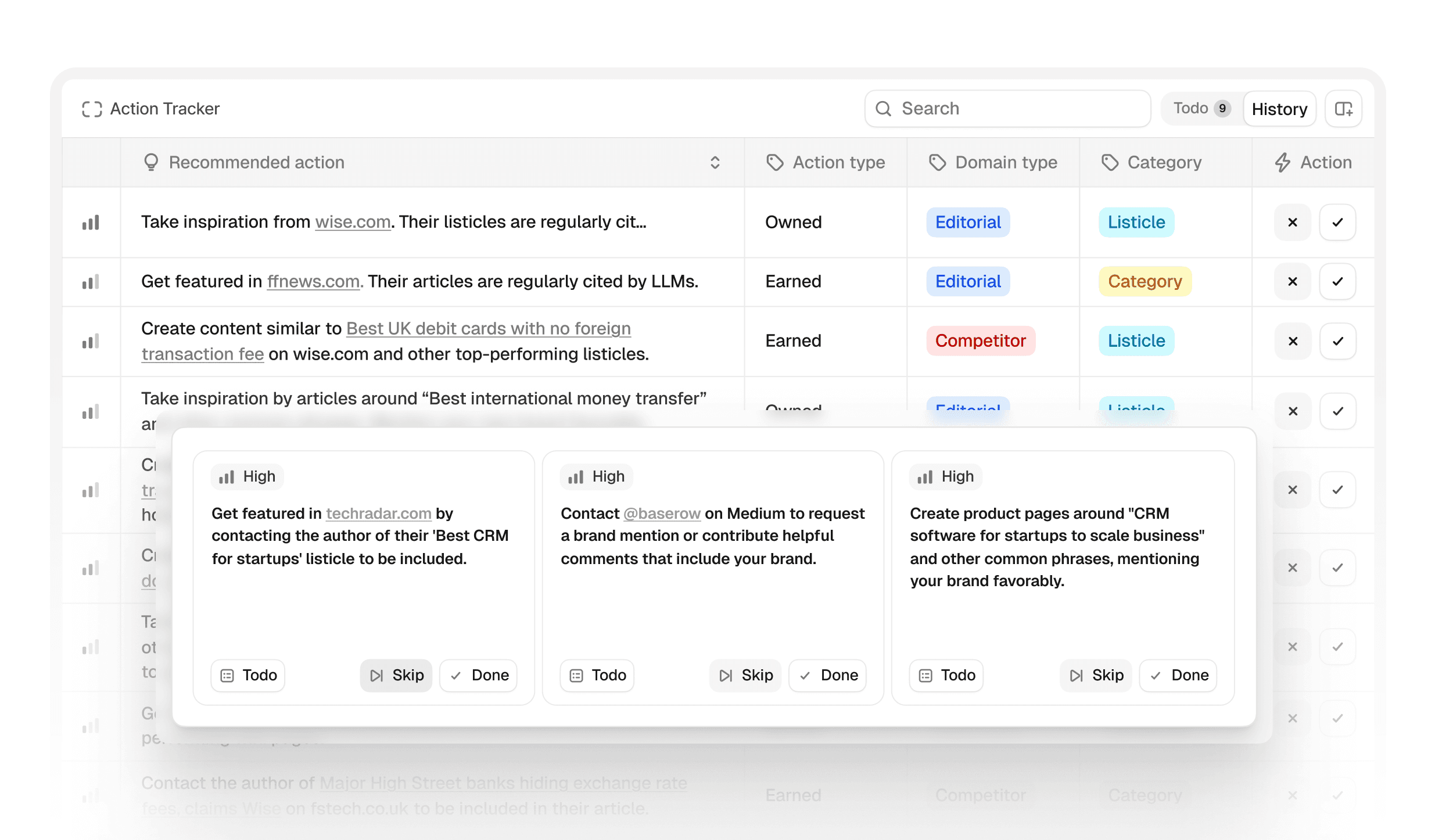

If ChatGPT favors English content, you need to understand which English content it favors.

This isn't about blindly translating everything. That's not feasible or strategic. Instead, use data to inform your decisions:

Identify which content types ChatGPT cites. Is it pulling from Reddit discussions? Wikipedia entries? Industry publications? Company blogs? Product comparison sites?

See what your competitors have in English that you don't. Are they getting cited from English-language sources you're not present in? Which ones matter? Is it easy for you to get featured in them too?

Make strategic content decisions. Maybe you create English versions of key product pages. Maybe you focus on getting mentioned in English-language listicles.
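One way to act on the competitor question is a simple comparison of cited domains. The sketch below assumes you have exported ChatGPT answers together with the brands they mention and the URLs they cite, for example from an AI-visibility tool; the dict keys, brand names, and domains are hypothetical placeholders. It counts the source domains cited in answers that mention a competitor but not you.

```python
from collections import Counter
from urllib.parse import urlparse


def domain(url):
    """Return the lowercase host of a URL without a leading 'www.'."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def citation_gaps(answers, you, competitor):
    """Count source domains cited in answers that mention `competitor` but not `you`.

    `answers` is a list of dicts with hypothetical keys:
      "brands":  brand names mentioned in the ChatGPT answer
      "sources": URLs ChatGPT cited for that answer
    Each domain is counted at most once per answer.
    """
    gaps = Counter()
    for answer in answers:
        brands = set(answer["brands"])
        if competitor in brands and you not in brands:
            gaps.update({domain(url) for url in answer["sources"]})
    return gaps.most_common()


# Hypothetical export; brands and domains are placeholders.
answers = [
    {"brands": ["GlobalCo"],
     "sources": ["https://www.example-reviews.com/a", "https://en.wikipedia.org/wiki/X"]},
    {"brands": ["GlobalCo", "LocalCo"],
     "sources": ["https://www.example-reviews.com/b"]},
    {"brands": ["GlobalCo"],
     "sources": ["https://www.example-reviews.com/c"]},
]

print(citation_gaps(answers, you="LocalCo", competitor="GlobalCo"))
# [('example-reviews.com', 2), ('en.wikipedia.org', 1)]
```

Domains at the top of this list are the English-language sources where a competitor shows up and you don't, which is a starting point for deciding where getting featured would matter most.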

The point is to make informed decisions based on what's actually working in AI search, not guesses. But how do you know which English sources ChatGPT actually trusts in your category?

Peec AI's Actions module shows you what type of content to create to succeed in AI search - based on what's actually getting cited, not theoretical best practices.

You can see:

Which sources ChatGPT trusts in your category

Which content formats get surfaced

Where your visibility gaps are

What content opportunities exist

Strategic content creation beats random translation every time.

Why this issue won’t fix itself

This language bias isn't going away on its own.

US-based AI models like ChatGPT create systemic bias against non-English businesses. It's not malicious, but rather a consequence of training data, design decisions, and English-language internet dominance. But the effect is real: local businesses lose to global competitors, even in their home markets.

The long-term fix requires investment in multilingual AI models that serve global markets fairly. Europe, Latin America, and Asia need AI systems trained on their languages, their sources, and their market contexts, not as secondary considerations but as primary design goals. Until that happens, businesses in non-English markets need to adapt: understand the bias, track your visibility, and create strategic content that works within the current system.

It's not ideal. But it's reality.