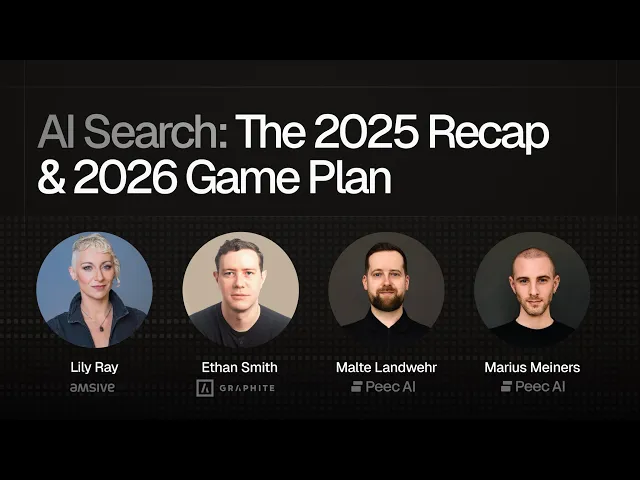

Four leading AI search experts recently gathered for a webinar called "AI Search: 2025 Recap & 2026 Game Plan" to share what's actually working in AI search optimization right now.

The speakers brought real-world expertise to the table:

Lily Ray, VP of SEO Strategy at Amsive

Ethan Smith, CEO of Graphite

Malte Landwehr, Chief Product Officer at Peec AI

Marius Meiners, CEO of Peec AI

What made this conversation so valuable? These aren't just theories or LinkedIn hot takes. These are practitioners working with real clients, analyzing real data, and delivering real results.

I've distilled their insights into what matters most, along with my personal commentary on each point.

What was overhyped in AI Search in 2025?

Let me start with what doesn't work as well as many people claim.

Overhyped: The "GEO is completely different from SEO" narrative

Ethan pushed back on one of the biggest pieces of hype: "I think just saying that answer engine optimization and SEO are totally different - so it's a completely brand new thing, you throw out SEO, don't worry about that anymore - that's an exaggeration. There are differences, but the magnitude of the differences was overhyped."

Ethan’s right: optimizing for ChatGPT is different from optimizing for traditional search results in Google. But many SEO fundamentals are still valid.

Overhyped: llms.txt as an SEO tactic

Malte didn't hold back on llms.txt: "It was overhyped - not as a tool to feed information to coding agents or document how your API works, but as the secret SEO, GEO, AEO growth hack."

The nuance matters here. If you're building developer tools or APIs and want AI coding agents to understand your documentation, llms.txt has value. That's what it was designed for.

But if you're an SEO trying to drive traffic from ChatGPT? It's not the silver bullet people claimed.

Lily acknowledged there might be long-term potential: "I do think there are really smart people making a good case for how it might be something that's used in the future. So we'll see. I think for now it's a bit overhyped."

Overhyped: markdown copies

Malte mentioned another overhyped strategy: "Creating markdown copies of every article on your website."

He added that this creates duplicate content without clear benefits. Malte actually wrote a detailed analysis of this if you want to dig deeper: llms.txt & .md Files: Important AI Visibility Helper or Hoax?

So what does this mean? If you're an SEO looking to get more traffic from ChatGPT or Perplexity, llms.txt and .md files are a distraction. Worse, the .md files might actually hurt your traditional SEO performance, which can negatively impact your AI visibility through web search and grounding.

My take on this: Anytime someone shares a tactic, ask yourself: do they have proof it works? llms.txt sounded useful, so many people in the SEO/GEO space started using it because they believed it would help. But I haven't seen anyone provide proof that it actually works. Belief isn't evidence.

What’s working shockingly well in AI search?

Now for what's working - tactics you probably wish didn't work, but do.

Self-serving listicles

Lily was clear: "The listicles where you put your own name, your own company, as the best in your category - I was really surprised to see how well these work, even on Google. I was very shocked to see that this still works as well as it does."

She added: "I don't think it will work forever, but I think it's a huge loophole right now that people are rightfully exploiting because it works extremely well."

Paying affiliates

Ethan expanded on the listicle pattern: "If you want to be the best credit card, you could just spend money and pay these affiliate sites to say that you're the best credit card, and you become the best credit card. I'm surprised that these affiliate sites are still ranked so high in LLMs and in search."

I think this is a strange moment in marketing where the most effective tactics are also the most questionable. This creates a dilemma for honest brands: do you stay clean and lose visibility, or do you adapt? To be clear: none of these experts are recommending you do this.

Lying about yourself

Malte shared a finding that's both shocking and revealing: "Lying about yourself works shockingly well. You can just write on your homepage that you are well-rated on G2 or recommended by Forbes. And if you're lucky, LLMs pick it up when people ask questions about your brand."

This is especially effective for smaller brands with limited third-party coverage.

Reciprocal mentions

Ethan introduced a less obvious pattern: "It's kind of like back in the day when you would have reciprocal backlinks - I link to you, you link to me. Now it's reciprocal mentions."

His example: "What's the best meeting note transcription tool that integrates with Zoom? The answer is Otter. That's because there's an Otter page and there's a Zoom page mentioning each other."

My comment: this is particularly interesting. It suggests LLMs are verifying relationships through mutual acknowledgment. Is that the result of knowledge graphs, or a natural side effect of using vector databases? Either way, it opens up plenty of ideas for experiments!
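One simple starting point for such an experiment is checking which brands in your category already mention each other. The sketch below is only an illustration: the brand names and page texts are hypothetical placeholders, and a whole-word text match is an assumed stand-in for whatever signal LLMs actually use.

```python
import re


def mentions(text: str, name: str) -> bool:
    """True if `name` appears in `text` as a whole word, ignoring case."""
    return re.search(rf"\b{re.escape(name)}\b", text, re.IGNORECASE) is not None


def reciprocal_pairs(pages: dict[str, str]) -> list[tuple[str, str]]:
    """Return brand pairs whose pages mention each other.

    `pages` maps a brand name to the text of a page that brand controls.
    """
    brands = sorted(pages)
    pairs = []
    for i, a in enumerate(brands):
        for b in brands[i + 1:]:
            if mentions(pages[a], b) and mentions(pages[b], a):
                pairs.append((a, b))
    return pairs


# Hypothetical brands and page copy, for illustration only.
pages = {
    "NoteTool": "NoteTool records and transcribes your MeetApp calls.",
    "MeetApp": "Popular MeetApp integrations include NoteTool.",
    "PlanBoard": "PlanBoard works alongside MeetApp.",
}
print(reciprocal_pairs(pages))  # [('MeetApp', 'NoteTool')]
```

PlanBoard mentions MeetApp, but MeetApp does not mention it back, so that pair is left out. One-way mentions like this could serve as the comparison group when testing whether reciprocity makes a difference.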

When Will the Manipulative Tactics Stop Working?

This question matters because many of these tactics feel familiar. They remind me of the shady stuff that worked in the early days of SEO - before Google's Panda and Penguin updates wiped out entire business models overnight.

Are we about to see history repeat itself?

Ethan's view: LLMs will fix the worst problems first, slowly

Ethan believes they'll "take the biggest flaws in the answers and rank which things are causing the largest quality degradations."

In other words: they'll prioritize. The tactics causing the most obvious quality problems get addressed first. Everything else continues working.

His reasoning: LLMs are still early in building domain authority algorithms. They're good at relevance ranking - finding URLs that match the query - but the domains that show up are "kind of strange and unexpected."

"I think it's really hard to build a domain authority algorithm. So I think they'll be solving core algorithm stuff as opposed to stopping the manipulations."

Lily's view: manual actions coming in 2026

Lily sees it differently. She expects something like what happened with Google between 2022 and 2024 - a wave of anti-spam measures.

"I think we're probably going to see some type of new manual actions next year. It's becoming too popular, and we've seen this so many times in the SEO space."

Her logic: when a tactic becomes too widespread and too obvious, platforms crack down. And we're reaching that point with AI search manipulation.

Malte's middle ground: worst offenders get hit

"I think most stuff will continue to work, probably forever. But the worst things will stop working in 2026."

This reminds me of the classic SEO cat-and-mouse game we've seen for 20 years. Users exploit the algorithm. The platform patches the most obvious exploits. Users find new exploits. The cycle continues.

With Google, the pattern held through link schemes, content farms, and scaled AI content: each wave gets partially addressed, but the game never ends.

What Should Marketing Teams Do More Of?

We've covered what's overhyped and what's working (even if it shouldn't). Now for the more important question: what should you actually be doing?

The experts shared three recommendations that stood out.

Change how you think about prompt tracking

Malte believes that SEOs should think like brand marketers - tracking prompts is more similar to word-of-mouth marketing than traditional keyword tracking.

He notes that while marketers often want exact search volumes, most prompts in AI search are so unique that the search volume is effectively 1.

Focus on research

Ethan spent several years in academic research, and he's concerned about the lack of scientific standards in AI search. Too many claims, not enough proof.

He proposed a framework for how research should actually work in this space. I'm highlighting two points that are especially problematic in our industry because almost nobody follows them.

Use control groups

If your AI conversions went up 30%, was it because of your tactic - or because the entire category grew 30%?

Ethan's point: you can't prove causality without a control group. You need a test group (using the tactic) and a control group (not using it) to compare.

Most case studies skip this step. They show results without showing what would have happened anyway. That's not evidence - it's correlation dressed up as causation.
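To make the control-group idea concrete, here is a minimal sketch that subtracts the control group's growth from the test group's growth. The function names and the conversion numbers are hypothetical, and this simple difference is only one way to frame the comparison, not Ethan's exact method.

```python
def relative_lift(before: float, after: float) -> float:
    """Relative change between two periods, e.g. 0.30 for +30%."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (after - before) / before


def lift_vs_control(test_before: float, test_after: float,
                    control_before: float, control_after: float) -> float:
    """Growth of the test group beyond what the control group did anyway."""
    return (relative_lift(test_before, test_after)
            - relative_lift(control_before, control_after))


# Hypothetical monthly AI-attributed conversions.
# Test pages (tactic applied): 200 -> 260, a 30% increase.
# Control pages (no tactic):   180 -> 225, a 25% increase.
net = lift_vs_control(200, 260, 180, 225)
print(f"{net:+.1%}")  # +5.0%
```

In this made-up example the headline number is 30%, but only about 5 percentage points can be credited to the tactic. The rest happened on pages that were left alone.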

Focus on reproducibility

Ethan believes a finding is only likely to be true if multiple people can reproduce it.

His example: internal links impact SEO rankings. We know this works because many different researchers have published consistent findings. It's not just one person's claim - it's a pattern multiple people have verified independently.

This is especially crucial given the probabilistic nature of LLMs. Results can vary based on timing, exact phrasing, and model updates. If only one person found a result once, it might be an anomaly. If more people found it independently, it's probably real.
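Because a single LLM answer is one draw from a distribution, it helps to run the same prompt many times and report a rate with an uncertainty range. The sketch below assumes you have already collected the response texts yourself. The brand name and sample responses are hypothetical, and the Wilson score interval is one common choice for a proportion, not something the speakers prescribed.

```python
import math
import re


def mention_count(responses: list[str], brand: str) -> tuple[int, int]:
    """Return (responses mentioning the brand, total responses)."""
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    hits = sum(1 for text in responses if pattern.search(text))
    return hits, len(responses)


def wilson_interval(hits: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a proportion."""
    if n == 0:
        raise ValueError("need at least one response")
    p = hits / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)


# Hypothetical answers to the same prompt, collected on repeated runs.
responses = [
    "For payroll, many teams pick Acme or a similar tool.",
    "Popular options include several well-known vendors.",
    "Acme is often recommended for small businesses.",
]
hits, n = mention_count(responses, "Acme")
low, high = wilson_interval(hits, n)
print(f"mentioned in {hits}/{n} runs, interval {low:.2f} to {high:.2f}")
```

With only three runs the interval is very wide, which is the point: a handful of screenshots cannot distinguish a real effect from noise. Independent reproduction by other people narrows it further.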

Using AI to Scale Content: What Works and What Doesn't

One topic that came up repeatedly: using AI to generate content.

Lily was explicit about what doesn't work: "Using generative AI to scale content really quickly."

She's seen people try this - pumping out high-volume, low-effort content to build "topical authority." Her assessment: "This strategy almost always crashes and burns."

Ethan agreed: "I never saw mass-scaled AI content working well for very long, outside of really quick spikes."

But there's a nuance here

When Marius asked Lily about safer ways to scale content with AI, she gave interesting insights:

"I think it almost always boils down to how much original insight there is in the article, how much information is net new compared to what's already been offered. There are ways to scale content where you can incorporate quotes from your experts, original data, original imagery - and that will be sustainable. But when it's really just rehashing what's already been said, maybe using a lot of AI images or stock photography, that usually does not work very well."

Ethan put it more bluntly: "I don't think AI-generated content with no human in the loop and no unique insights will work."

Where AI content actually works

Malte added an important distinction: "Standalone AI-generated content is very risky, but adding AI-generated paragraphs to existing landing pages can work very, very well."

His examples: summarizing user reviews, updating descriptions based on current weather, adding context to product pages.

This is supplementary content, not primary content. And Malte mentioned he has deployed this on hundreds of thousands of landing pages successfully.

The key: structured websites. E-commerce, price comparison, flights, hotels - sites where you have a lot of data in the background that can be summarized with AI.

Malte summarized it: "You can unlock a lot of value by summarizing that data. And you can add it with almost no risk."

How Much Revenue Actually Comes from AI Search?

This was one of the most practical parts of the conversation, and one of the trickiest. If you're tracking AI search, you need to know what "success" looks like.

The consensus: 4-10% for B2B SaaS

Ethan shared what he's hearing from clients: "The metrics that I hear the most is 4 to 10%. We work with a bunch of B2B companies and tech companies - they have the earliest adopters - and for them it's typically around 4 to 10%."

Lily confirmed this from her own data: "My team did a study using some of our own client data and it was 4 to 5%."

Malte's numbers skewed higher: "From B2B SaaS and services clients, I'm often hearing 10 to 20%. The highest I heard was 30%, but I think there were just other marketing channels not performing."

The tracking problem

Here's the challenge: it’s very difficult to analyze conversions from LLMs.

As Ethan noted: "If you ask 'What's the best payroll management software?' there's nothing to click on. You don't know if they saw you, opened a new tab, typed in the domain, and then converted through brand search."

The LLM influenced the decision, but it shows up in your analytics as direct or branded search traffic - not AI search.
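A small sketch shows why referrer-based reporting undercounts. It labels a session as AI search only when the referrer host is on a list. The host list is an assumption you would need to maintain for your own setup, and the category names are hypothetical.

```python
from typing import Optional
from urllib.parse import urlparse

# Assumed referrer hosts for AI assistants; extend or correct for your analytics.
AI_REFERRER_HOSTS = {
    "chatgpt.com",
    "chat.openai.com",
    "perplexity.ai",
    "www.perplexity.ai",
    "gemini.google.com",
    "copilot.microsoft.com",
}


def classify_session(referrer: Optional[str]) -> str:
    """Label a session from its referrer URL alone."""
    if not referrer:
        # No referrer: typed URL, bookmark, or a visit the LLM inspired
        # without a click. These cases are indistinguishable here.
        return "direct"
    host = (urlparse(referrer).hostname or "").lower()
    if host in AI_REFERRER_HOSTS:
        return "ai_search"
    return "other"


sessions = [
    "https://chatgpt.com/",
    None,
    "https://www.google.com/search?q=acme+payroll",
]
print([classify_session(r) for r in sessions])
# ['ai_search', 'direct', 'other']
```

Only the first session is counted as AI search. A user who saw the brand in an LLM answer and then typed the domain or searched the brand name lands in the second or third bucket, so the referrer-based share is best read as a lower bound.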

Interesting twist: LLMs helping in the decision-making process

Malte and Marius discussed anecdotal evidence of people using LLMs to help them make decisions.

Marius shared: "I just talked to someone two days ago who was comparing building insurance offers. He threw all the offers into ChatGPT - like 70-page documents - and asked it to rank them and tell him which one was cheapest or most expensive."

Ethan added: "I think one new behavior with LLMs is having them be a decision maker. You would not ask Google, 'These are two options. Which should I pick?' But there are a bunch of high-stakes decisions - best payroll management software, best website builder - and LLMs are particularly good at that."

It shows that to succeed in AI search, you need to be successful at two stages: when people are looking for general category information ("What are the best options?") and when they're making the final decision ("Which of these should I choose?").

Who Will Win the AI Search Race?

Beyond the tactics and immediate strategies, there's a bigger question: which platform should you even be optimizing for?

Short term: It's Google vs. OpenAI

For the next year, Ethan thinks it's obvious: the race is between Google and OpenAI. Everyone else is a distant third.

Lily's bet: Google already owns the default position

"I'm betting on Google."

Her reasoning is straightforward: "14 billion searches a day around the world. So many people - we're in a tech bubble - you go talk to some random person, they're still using Google. They haven't even started using ChatGPT yet."

Google already has the ultimate default position. They built it over 20 years. They don't need to acquire users or pay for distribution.

She also noted OpenAI's vulnerabilities: "OpenAI is making a lot of changes to the product because of external pressure and lawsuits and security issues. I think that's causing a lot of people to like it less, because they liked their older versions of ChatGPT."

If Google can deliver an LLM experience within the ecosystem people already trust - Gmail, Search, Maps, YouTube - why would the average person switch?

One-Liner Advice for 2026

Marius asked Lily, Malte and Ethan for their one-liner advice for 2026.

As it turns out, one-liners became full answers - but that's fine. The detail made them more valuable.

Here's what they said:

Malte: Just start tracking AI search

"Work on AI search is literally my advice, because so many people are not doing it, have no idea if they are mentioned or if their competitors are mentioned. So just start."

Stop debating whether it matters. See if you appear in ChatGPT. See if your competitors appear. Start collecting data.

Lily: Create original research, then amplify it everywhere

"Creating original ideas and original research, but also supplementing that with cross-platform multimodal amplification of your content."

She admitted she's guilty of skipping the amplification step herself: "I speak at a conference with original research and then I don't do anything with that deck. But there's a million different ways you can take that information and put it out there on all the different platforms - make a video, make audio, all of that."

Don't let your research sit as a single conference talk or blog post. Turn it into video, audio, social posts, infographics. Amplify it across every platform.

Ethan: Run experiments and talk to people who've done them

Ethan's advice was straightforward: run experiments, because we're still figuring this out. But since you can't test everything yourself, learn from people who already have.

The key? Get specific details, not vague advice.

As Ethan put it: "There are lots of smart people who haven't published these findings. The information is in their head. Go find them, have lunch or get drinks with them, and ask them to walk you through all of their experiments in very specific detail."

His approach: gather insights from as many experienced practitioners as possible. Then share your own results back with the community.

My Takeaways

The reciprocal mentions pattern was the biggest revelation for me. When Otter mentions Zoom and Zoom mentions Otter, LLMs cite both more confidently. It's like they need mutual confirmation - not just one brand making claims, but two brands validating the relationship. This opens up all kinds of experiment possibilities.

People are using LLMs differently than we expected. Most still think of AI search as information discovery, which makes sense given how we approached traditional SEO. But people now use ChatGPT to make final purchase decisions - comparing insurance offers, analyzing proposals, choosing between brands. You need to show up at two critical moments: when someone asks "What are my options?" and when they ask "Should I choose Brand A or Brand B?"

Meanwhile, companies like Google are running multiple competing products - Gemini, AI Overviews, AI Mode - all at full scale simultaneously. Which led me to an interesting realization: the real competition might not be Google vs. ChatGPT. It might be traditional search vs. AI search - which means Google and ChatGPT could end up on the same side.

They're both trying to change how people find information. Their common enemy is the status quo.

Will platforms shut down the manipulative tactics we discussed earlier? I hope so, but history suggests otherwise. SEO has always been a cat-and-mouse game - exploit the algorithm, wait for the patch, find a new exploit. AI search will be no different.